Unlocking Future Business Benefits: Navigating Challenges and Sourcing Quality Market Data under FRTB

The ability to apply accurate and relevant data is the key to unlocking future business benefits under the Fundamental Review of the Trading Book (FRTB) regime and the strengthening of the value-at-risk (VaR) framework for capital adequacy.

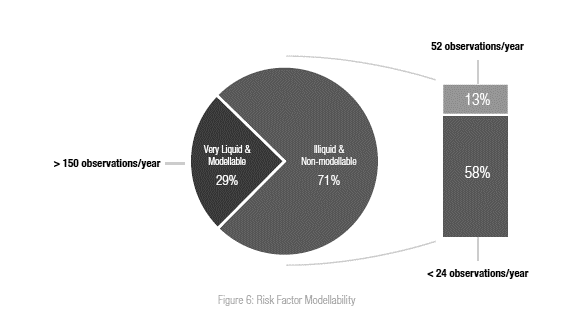

Among the many ambiguities of implementing the new regulation, internal modelling of market risk exposure is a well-known challenge across the industry. Without access to useful data, non-modellable risk factors (NMRFs) can result in suboptimal capital alignment with the underlying risks, which can undermine the viability of desk-level internal models. In markets where data is scarce, sourcing quality market data will play a pivotal role in mitigating non-modellable risk.

Introduced as a framework in 2016, the FRTB regime is planned for a phased rollout from 2022. The new approach utilizes an alternative method of measuring market risk using expected shortfall (ES) as the primary exposure measure instead of VaR, while also ensuring alignment of the front office desk models with market risk calculations for their internal models. FRTB represents the next generation of market risk regulatory capital rules for large, international financial institutions. It has also encouraged vendor solutions to develop alternative offerings that can help firms implement new risk management capabilities.
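The difference between the two measures can be sketched with a simple historical-simulation calculation. This is only an illustration: the function names, the sign convention (negative P&L is a loss), and the tail-size rule are assumptions made for this example and are not taken from the regulation or from any firm's methodology.

```python
def tail_size(n_scenarios, confidence):
    """Number of worst scenarios in the tail (assumed rule: at least one)."""
    # round() guards against floating-point noise such as 1.9999999999999996
    return max(1, int(round(n_scenarios * (1.0 - confidence), 9)))


def historical_var(pnl, confidence):
    """VaR: the smallest loss among the worst (1 - confidence) share of scenarios."""
    if not pnl:
        raise ValueError("pnl must contain at least one scenario")
    losses = sorted((-p for p in pnl), reverse=True)  # largest loss first
    return losses[tail_size(len(losses), confidence) - 1]


def expected_shortfall(pnl, confidence):
    """ES: the average loss across the worst (1 - confidence) share of scenarios."""
    if not pnl:
        raise ValueError("pnl must contain at least one scenario")
    losses = sorted((-p for p in pnl), reverse=True)
    k = tail_size(len(losses), confidence)
    return sum(losses[:k]) / k


# Hypothetical daily P&L scenarios, used only to exercise the functions
sample_pnl = list(range(-10, 10))
var_90 = historical_var(sample_pnl, 0.90)
es_90 = expected_shortfall(sample_pnl, 0.90)
```

Because ES averages every loss beyond the VaR threshold, it is never smaller than VaR at the same confidence level, and it reacts to how severe the tail losses are rather than only to where the tail begins.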

Many firms are investing significant time and effort to address these challenges, as NMRFs can constitute a large proportion of the capital charges. As a result, Capco and Sia Partners have developed a set of methodologies and processes for identifying, evaluating, optimizing, and sourcing appropriate market data for risk management. We believe that these cornerstones are vital to ensuring an ongoing, robust risk management and capital adequacy framework. These components are relevant across all firms, whether they are looking to implement FRTB or to raise the bar of their operating effectiveness and benefit from extended data and solution offerings.

The FRTB builds on Basel II.5’s theme of providing more attention to managing credit risk exposure and reducing the market risk framework’s cyclicality by setting higher capital requirements. In 2018, the ‘industry’, represented by the International Swaps and Derivatives Association (ISDA), the Global Financial Markets Association (GFMA), and the Institute of International Finance (IIF), emphasized where FRTB would ultimately impede market-making activities and the operational fluidity of the global capital markets. These negative impacts could lead to rising trading costs when executing transactions and potentially to a reduction in overall market liquidity, which (ironically) could ultimately reduce the volume of transactional data available for risk factor price verification.

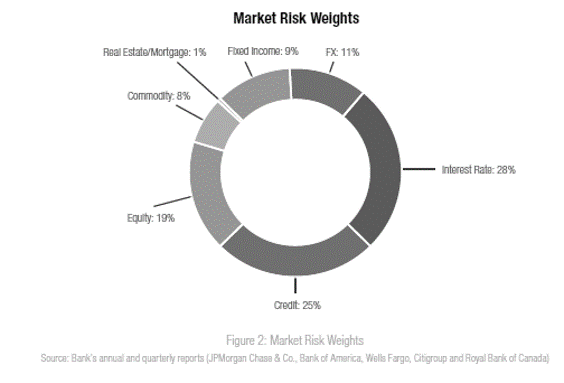

Global Systemically Important Banks (G-SIBs) are already well underway with their FRTB implementation programs, enhancing both the standardized approach (SA) and the internal model approach (IMA). Investment banks generally prefer expected shortfall (ES) over VaR because VaR is not sub-additive: it can fail to reflect the benefit of diversifying a portfolio. The top five North American banks (by market capitalization) are JPMorgan Chase & Co., Bank of America, Wells Fargo, Citi, and Royal Bank of Canada, with a combined market value of 1.25 trillion. According to their annual reports, each measures VaR at the 99 percent confidence level for price movements over a one-day holding period. In aggregate, we find they are mostly exposed to interest rate risk and credit risk, at an annual average of 28 percent and 25 percent, respectively (Figure 2).

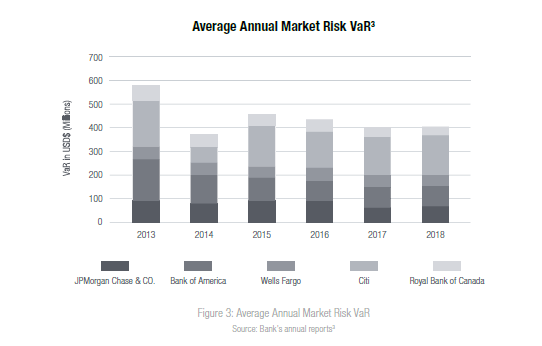

Overall market risk exposure has been gradually falling, with the total average annual market risk VaR across the top five banks being reduced by 31 percent from $590 million in 2013 to $403 million in 2018 (Figure 3). Many financial institutions are still voicing their concern regarding stricter capital requirements in conjunction with increased compliance costs reducing profits.

As a direct result of the capital cost penalties planned for products with non-modellable risk factors, firms are focusing their attention on reviewing their product coverage. To make sound business sense, the cost of transaction execution needs to be sustainable. Smaller, niche and boutique firms are potentially able to take a more significant market share of trading for products with high NMRF impacts, as a result of the more stringent capital adequacy impacts for G-SIBs.

Firms are driven primarily to manage ‘tail risk’ for market stability purposes and to optimize their capital utilization for profitability. In the future, there may be fewer players executing on illiquid or highly non-modellable products. In turn, the reduced availability of modellable price verification points will potentially make transaction data more valuable to vendors. This creates an opportunity for those who wish to sell their data into the growing suite of vendor offerings.

As a direct consequence of FRTB, vendors have been eager to develop and monetize products for companies that struggle to implement the full suite of data and lack computational capacity and ongoing monitoring tools. All firm types are implementing solutions, as they begin to recognize the operational and financial benefits of more effective market risk management. Niche execution houses that are not G-SIBs are often capital constrained and are investing in end-to-end platforms with embedded data, modelling and reporting capabilities. Firms are looking at top-down data providers to support tooling, as well as bottom-up technology services to access data within a flexible platform. Regardless of approach, one rule stays true: accessibility to accurate data remains key to success.

These stricter requirements place a higher burden on accurate and effective risk modelling, despite ongoing challenges to the procurement of quality data sets, especially regarding illiquid assets and NMRFs.

Because the underlying markets are liquid, the vast majority of FX risk factors, as well as highly liquid yield curves, should pass the risk factor eligibility test (RFET). However, banks are likely to run into problems with longer-dated, medium- to low-liquidity yield curves, such as PLN – WIBOR – 1Y. The extension of the observation period in the final rules had little effect on increasing the modellability of rates products, so banks can benefit from ensuring that they focus on this product class as a priority. The credit derivatives market has been diminishing since 2009, and as a result, we have seen a reduced number of market makers. If these market makers exit the industry, fewer real prices would be observed.

A study carried out by IHS Markit showed that the final rules had a significant effect on the modellability of single-name CDSs and cash bonds. For credit default swaps (CDSs), modellability increased to 26 percent from 18 percent on a population of over 3,000 CDS issuer buckets. Despite the increase, the overall percentage is still relatively low, and as time goes by, banks will be expected to reconsider their holdings in non-modellable products due to the associated capital implications of keeping them on their books.

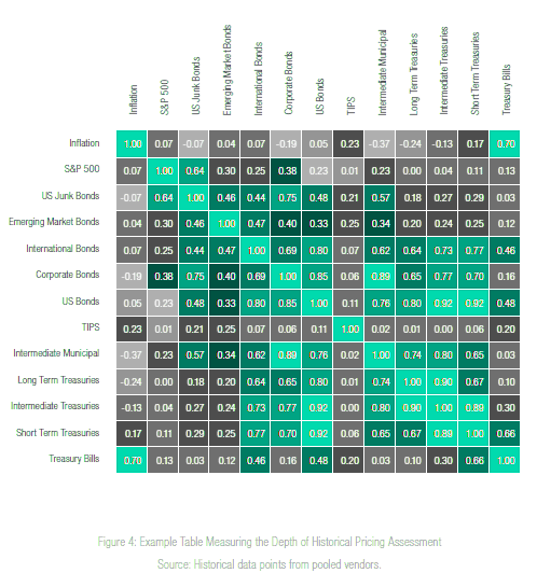

Many firms and vendors have undertaken studies to measure the availability of historical market data (an example is shown in Figure 4) to understand how much depth there is in the price verification tests required for modellability. The findings highlight the disparate data points within the industry. This puts pressure on firms to ensure that data storage solutions and corresponding data models are robust enough to pool and integrate data from multiple sources (including capabilities for temporal storage). Firms must rigorously capture all internally generated data points from trading activity and strategically supplement this with data sourced from vendors.

Regulatory expectations and capital impacts result in a difficult balance between the costs and efforts involved with implementing and managing FRTB. Uncertainty remains around determining the exact capital impacts of NMRFs, alongside ambiguities in the regulatory interpretation and introduction of capital floors subject to the standardized approach. Firms need to be able to measure and assess their trading book liquidity to be well placed with management information for decision making. Trading strategies and product coverage impacts are key to efficient implementation and capital cost containment. Undertaking an exercise to map and agree the product liquidity rankings can be an effective step towards identification and prioritization of market data gaps.

Asset classes and product types that are particularly challenging regarding market data availability and risk factor modellability include:

Other considerations for complexity include:

Having a robust framework for data evaluation and prioritization is essential for considering numerous impacting factors and allocating the resources of the project team and business. Our experience has led us to develop a set of standard evaluation criteria to customize the business priorities for each firm. Each identified gap, challenge, or opportunity can be measured consistently to allow the focus to be on gaps which are most likely to be resolved and deliver business benefits.

The criteria can be reviewed within the firm to establish specific ratings, define key success criteria, and adjust the scoring mechanism to suit the overarching strategic business principles.

| # | Factors | High | Medium | Low |
|---|---|---|---|---|
| 1 | Trading volume | Significant & regular volume | Variable volume, cyclical | Occasional flow, special request |
| 2 | Revenue base | Core Revenue flow and goal for desk | Regular flow but not a core goal | Not core revenue/business goal |
| 3 | Number of impacted trading desks | Multiple desks or large desk impacted | Non-large desk or multiple low impacts | Single desk, specialist product |
| 4 | SA/IMA expectations | Desks aiming for IMA coverage | Flow could be moved to SA desk | Planned for SA desk only |
| 5 | Number of clients impacted | Multiple, broad usage by client base | Key clients trading product base | Specialist or small volume of clients |
| 6 | Product complexity | Regular liquid product | Long tenure or illiquid product | Structured or non-vanilla product |
| 7 | Data available internally/externally | Available with high quality externally | Available with patchy data only | Requires internal data to augment |
| 8 | Granularity availability | Existing data has appropriate buckets | Some require data extrapolation | Granularity consistently lacking |
| 9 | Data quality and consistency | Data has a historical and consistent quality | Data is patchy and can be inconsistent | Data lacks history and is low quality |
| 10 | External data vendor on-boarded | Additional data from existing source | Provider onboarded for another topic | New provider requiring setup |
| 11 | Identified risks/issues | No identified issues or risks | Known challenges with product | Existing MRAs/self-identified risks |
| 12 | Percent manual processing | Fully STP, no manual work required | Occasional manual mapping/processing | Regularly requires manual processing |
| 13 | Likelihood of modellability | Risk factor data is readily available | Risk factor data is patchy/inconsistent | Risk factor data is lacking/low quality |
| 14 | Strategic business choice | Key strategic product – a must | Legacy product – to be reconsidered | Grandfathered, not core business need |
| 15 | Regulatory constraints | Standard regulatory requirements – no special treatments | Some regulatory specific constraints to consider | Specific regulatory constraints which require special treatment |
| 16 | Geographical complexity | No additional geographical complexity | Minor geographical impacts to consider | Significant or complex geographical considerations |
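One way a firm might turn the High/Medium/Low ratings above into a consistent ranking is a weighted average. The sketch below is a hypothetical illustration only: the point scale (High = 3, Medium = 2, Low = 1), the default weight of 1.0, and the function names are assumptions for this example, not the scoring mechanism used by the authors.

```python
# Assumed point scale; a firm would calibrate this to its own priorities
RATING_POINTS = {"High": 3, "Medium": 2, "Low": 1}


def score_gap(ratings, weights=None):
    """Weighted average score for one data gap.

    ratings: mapping of factor name -> "High" | "Medium" | "Low"
    weights: optional mapping of factor name -> weight (defaults to 1.0)
    """
    if not ratings:
        raise ValueError("at least one rated factor is required")
    weights = weights or {}
    weighted_points = 0.0
    total_weight = 0.0
    for factor, rating in ratings.items():
        weight = weights.get(factor, 1.0)
        weighted_points += weight * RATING_POINTS[rating]
        total_weight += weight
    return weighted_points / total_weight


def rank_gaps(gaps, weights=None):
    """Return gap names ordered from highest to lowest score."""
    return sorted(gaps, key=lambda name: score_gap(gaps[name], weights), reverse=True)


# Hypothetical gaps rated against two of the sixteen factors
example_gaps = {
    "EM rates curve": {"Trading volume": "High", "Likelihood of modellability": "Medium"},
    "Exotic credit": {"Trading volume": "Low", "Likelihood of modellability": "Low"},
}
priority_order = rank_gaps(example_gaps)
```

Keeping the weights separate from the ratings lets the business adjust strategic emphasis (for example, weighting revenue base more heavily) without re-rating every gap.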

An important aspect of FRTB-specific data that firms often overlook is an adaptable, dynamic, and robust data practice with an established collaboration between the front office (FO), market risk, and middle office. The FO generates and owns the raw and derived data, while Market Risk builds upon this data set with risk factor time series data that has specific requirements for data granularity. Therefore, for data to be available at a quality ready to be utilized, a firm should develop a holistic approach across its operating activities. Centralizing the data can help mitigate end-to-end operational data quality issues by ensuring a fully vetted capital data lineage and classification approach before metrics are derived. Data quality requirements are driven initially by real-time accessibility for pricing in the FO; the data can then be scrubbed further for downstream Market Risk usage, including product classification and proxying methodologies. Metrics and computations vary across asset classes, and any errors in these classifications can, in turn, increase operational stress and produce an inaccurate capital adequacy ratio.

Furthermore, an FRTB data solution can reduce manual errors and help to address costly errors from an operational perspective. Often the efforts are constrained by silos, and challenges exist from:

Given the complex modelling involved with FRTB data, specific metrics are difficult to accurately quantify on an ongoing basis and rely upon access to a consistent and accurate set of reference data.

In our experience working with large institutions, firms often perceive these gaps as quick fixes to the existing data practice, which is usually organized in asset-class-based silos. However, they can lead to significant failures in the execution of the risk and pricing models or, worse, to inaccurate model outputs. A simple data gap, such as the misrepresentation of a security (e.g., a bond with call/put optionality), could result in the bond being misidentified as option-free. This results in the underestimation of market risk, with lower VaR figures.

Gaps in a security’s attributes data, for example:

Regardless of whether there is access to a valid data feed, these gaps will still leave the risk model unable to price the security accurately and may ultimately lead to incorrect capital calculations.

Although ad-hoc data fixes can alleviate these data pain-points, a centralized data source approach will act as a strategic solution. ‘Golden source’ data practices can provide the opportunity for users to tackle these data gaps systematically. For example, developing automated processes with enhanced manual checkpoints can establish a framework of consistent data quality controls. While the cost of maintaining this data can increase, alternative solutions such as offshoring data cleansing procedures can be considered.

While many gaps may have been identified, the business prioritization of which ones to solve first is predominantly focused upon three objectives:

We believe that firms can better optimize their data by leveraging our defined matrix for data gap evaluations (Figure 7) to understand priorities across a range of capital advantages. Given the evaluated availability of data, both internally and externally, a firm can prioritize the data needs that resolve the most important and achievable revenue or product flows.

The IMA is the best-case scenario to achieve lower capital charges. To illustrate the benefit of the IMA versus SA, the industry conducted an impact study using a sample of 33 banks with quality data. The findings demonstrated that FRTB capital for trading desks under IMA is 3.21 times larger than the capital based on current IMA rules. To qualify, trading desks must first (1) be nominated by the bank and (2) satisfy back-testing, profit and loss attribution (PLA) tests, and capitalization levels. A rigorous process of stress testing is essential to standardize ES, using a dataset with at least 10 years of history. Depending on the class of risk factor, market liquidity is integrated into the calculation by assigning a liquidity horizon of between 10 and 250 days. Although this approach is preferable, securing market data on assets with varying liquidity remains a challenge to obtaining regulatory model approvals.

SA is the easier fall-back method for determining capital charges if the bank fails to implement an internal model. The SA capital requirement is the sum of three components: (1) risk charges under the sensitivities-based method, (2) the default risk charge, and (3) the residual risk add-on. Under this method, the financial institution must provide a regular disclosure report for all trading desks.
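The three-part summation described above can be written down directly. The class and field names below are hypothetical, and the figures in the example are made up purely to show the arithmetic; calculating each component is a substantial exercise in its own right and is not attempted here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StandardizedCharge:
    """Components of the SA capital requirement for one desk (illustrative names)."""
    sensitivities_based: float    # risk charges under the sensitivities-based method
    default_risk: float           # default risk charge
    residual_risk_add_on: float   # residual risk add-on

    def total(self) -> float:
        # The SA requirement is the simple sum of the three components
        return self.sensitivities_based + self.default_risk + self.residual_risk_add_on


# Made-up numbers, in any consistent currency unit
desk_charge = StandardizedCharge(
    sensitivities_based=70.0,
    default_risk=20.0,
    residual_risk_add_on=10.0,
)
desk_total = desk_charge.total()
```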

The FRTB requires banks to source reliable data to comply with standards. Banks that wish to use IMA must pass the RFET, which places a strain on their ability to source, organize, and map data for the risk factors. A June 2017 report by the ISDA, GFMA, and IIF highlighted that 36 percent of the IMA capital charge will be attributed to NMRFs. When adopting IMA, there is immense value in measuring capital charges by favorable risk weightings. If left unattended, the potential consequences include so-called cliff effects between SA and IMA, mainly because banks lose approvals as a result of insufficient market data.

NMRFs could result in over-capitalization and poor capital alignment with the underlying risks, and may undermine the viability of IMA. By classifying NMRFs as a capital add-on under the ES model, the Basel Committee on Banking Supervision (BCBS) addresses the dilemma of risk modelling for instruments lacking sufficient price observations. For a risk factor to be considered ‘modellable,’ the BCBS states that there must be ‘continuously’ available ‘real’ prices for a representative set of transactions. A price is ‘real’ if it is (1) a price at which the institution has conducted a transaction, (2) a price for a transaction between other arm’s-length parties, or (3) obtained from a committed quote.

Morgan Stanley looked at a representative sample of U.S. corporate bonds and found that only 43 percent of the assets meet the one-month gap rule. By extending the allowable gap to 90 days, they found that 60 percent of the same sample would satisfy a 3-in-90-day test. This implies that trading activity in liquid products can follow cyclical patterns, causing those products to fail the one-month gap rule.
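The effect of cyclical trading on the two tests can be sketched as follows. This is a simplified illustration: the thresholds (24 observations, a 31-day maximum gap, 3 observations in every 90-day window, a 365-day lookback) are parameters chosen for this example, and the reading of the "3-in-90-day" test as a rolling-window count is an assumption rather than the regulatory text.

```python
from datetime import date, timedelta


def _window(dates, start, end):
    """Unique observation dates within [start, end], in ascending order."""
    return sorted({d for d in dates if start <= d <= end})


def passes_max_gap(dates, as_of, min_observations=24, max_gap_days=31, lookback_days=365):
    """Enough observations in the lookback, with no gap between consecutive ones above the limit."""
    obs = _window(dates, as_of - timedelta(days=lookback_days), as_of)
    if len(obs) < min_observations:
        return False
    return all((b - a).days <= max_gap_days for a, b in zip(obs, obs[1:]))


def passes_rolling_count(dates, as_of, min_in_window=3, window_days=90, lookback_days=365):
    """Every window of `window_days` inside the lookback holds at least `min_in_window` observations."""
    start = as_of - timedelta(days=lookback_days)
    obs = _window(dates, start, as_of)
    window_start = start
    while window_start + timedelta(days=window_days) <= as_of:
        window_end = window_start + timedelta(days=window_days)
        if sum(1 for d in obs if window_start <= d < window_end) < min_in_window:
            return False
        window_start += timedelta(days=1)
    return True


# Hypothetical bond that trades in a six-day burst near each quarter end
quarter_end_trades = [
    date(2019, month, day) for month in (3, 6, 9, 12) for day in range(20, 26)
]
as_of = date(2019, 12, 31)
gap_result = passes_max_gap(quarter_end_trades, as_of)            # False: gaps of about 87 days
rolling_result = passes_rolling_count(quarter_end_trades, as_of)  # True: every 90-day window has 3+
```

The example bond has 24 observations in the year, so it clears the count requirement, yet it fails the maximum-gap test because activity is clustered around quarter ends. A rolling-window test tolerates that pattern.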

Failing the one-month gap rule compromises a firm’s ability to obtain IMA approvals and forces it onto the standardized approach, with consequences for capital relief and, in turn, for its trading strategies. The potentially harmful impacts include rising trading costs when executing transactions, longer durations for pairing counterparties, and ultimately a reduction in overall market liquidity. Understanding NMRFs is still an evolving process as discussions between the regulator and the industry continue. The anticipation is that a large number of risk factors will be non-modellable. The ISDA and the Association for Financial Markets in Europe (AFME) identified the most relevant and common risk factors as:

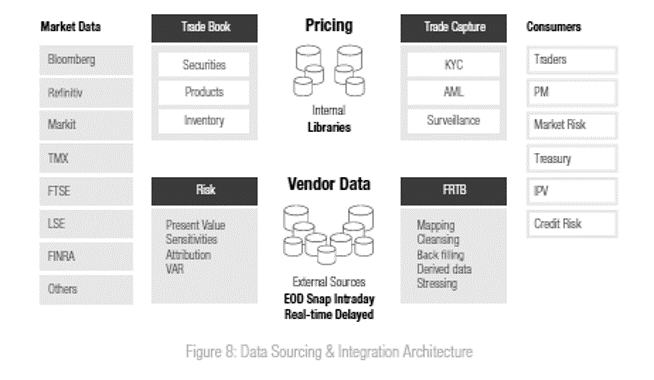

At the same time, there is an increased need for storing the history of market data, with the observation horizon for determining the most stressful 12 months spanning back to (and including) 2007 at a minimum. This means banks require reliable and flexible storage for time series data, with the ability to consolidate data sources to fill data gaps. Good quality data to evaluate, monitor, and manage is critical, as are the ongoing data quality processes for eliminating inconsistencies among information sources and preventing inaccurate data from persisting. Carefully managing the accuracy of capital calculations will significantly impact a firm’s investment decision strategy, with the potential for creating a meaningful governance framework for the future. Depending upon the business need, the sourcing of good quality market data from a diverse set of sources may be the key to success, including the capability to consolidate across internal and external sources. Ultimately, Market Risk is the consumer and must define a consolidated data set of risk metrics to analyze gaps, understand systemic issues, and map data behaviors. The challenge is sourcing compatible and accurate market data and integrating the enormous volume of data to meet the FRTB needs.

The challenge for all banks is that in some asset classes or products, such as those with long maturities or less liquid underlyings, their trading volume is not high enough to meet the modellability criteria. The usual data vendors, exchanges, and trade repositories often do not have these complex products in their data sets, or lack the post-trade information required to meet regulatory approval. Recent research shows that a mix of pooling and proxying will deliver the best capital impact for banks. However, according to the survey, only 34 percent of respondents globally have a ‘pretty good idea’ of their proxying strategy, and 38 percent have not started thinking about it yet. A significant proportion of the market, therefore, risks falling behind the first movers who are well advanced with their proxying strategies.

Vendors are developing tooling in this space – collating and cleansing the data, removing duplicates and aggregating transaction history in the same instrument into one complete time series. Such a series can give a more accurate indication of an instrument’s liquidity than an individual bank’s data in isolation. And such an NMRF utility, if set up well, can ensure that contributing banks can maintain ownership and control of their data and have their data in a secure place but can still get the benefit of a shared portfolio view.
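The collate, de-duplicate, and aggregate steps described above might look like the following in outline. This is a minimal sketch under stated assumptions: the `Observation` fields, and in particular the shared `trade_ref` used to recognize the same trade reported by two contributors, are hypothetical and do not describe any vendor's actual data model.

```python
from collections import defaultdict
from datetime import date
from typing import NamedTuple


class Observation(NamedTuple):
    instrument_id: str
    trade_date: date
    price: float
    contributor: str   # contributing bank (hypothetical field)
    trade_ref: str     # assumed shared reference identifying the same trade


def pool_observations(observations):
    """Merge contributions into one de-duplicated time series per instrument."""
    seen = set()
    series = defaultdict(list)
    for obs in observations:
        key = (obs.instrument_id, obs.trade_ref)
        if key in seen:
            continue  # the same trade reported by both counterparties counts once
        seen.add(key)
        series[obs.instrument_id].append((obs.trade_date, obs.price))
    # Sort each instrument's points chronologically
    return {instrument: sorted(points) for instrument, points in series.items()}


# Hypothetical contributions from two banks; T1 is reported by both sides
contributions = [
    Observation("BOND-A", date(2019, 3, 4), 101.2, "Bank X", "T1"),
    Observation("BOND-A", date(2019, 3, 4), 101.2, "Bank Y", "T1"),
    Observation("BOND-A", date(2019, 2, 1), 100.8, "Bank Y", "T2"),
]
pooled = pool_observations(contributions)
```

The pooled series for an instrument can then be tested for modellability as a whole, which is where a shared view can succeed even when each bank's own history is too sparse.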

The future market-data environment will see contributions from several large vendors, trade repositories, and industry utilities. Pooling multiple banks’ data to achieve a sufficient number and frequency of transactions is often a grassroots industry initiative, and such initiatives are beginning to take shape. Yet their success depends on the contributing banks’ IP ownership, including the control and security of their critical transaction data.

To design and implement a robust market data solution, firms should conduct a current-state assessment of market data capabilities and infrastructure. This requires a thorough understanding of key requirements, technology architecture and operational effectiveness. From a current state perspective, firms must consider whether internally stored data satisfies the RFET, as well as stressed expected shortfall requirements.

To address the prioritized requirements, each asset class (e.g., credit) needs to be assessed in its component products (e.g., corporate bonds) and then sub-products (e.g., high yield versus investment grade), which are mapped to risk factors. Additionally, a risk factor can be broken down into a more granular level of risk drivers (points on a curve/surface). These buckets provide a mapping schema for RFET observations to be used as criteria in the sourcing decision.
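The hierarchy described above lends itself to a simple mapping schema. The sketch below is hypothetical: the `Bucket` fields mirror the levels named in the text, while the example values, the function name, and the observation threshold are assumptions introduced only for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Bucket:
    """One leaf of the asset class -> product -> sub-product -> risk factor -> driver hierarchy."""
    asset_class: str
    product: str
    sub_product: str
    risk_factor: str
    risk_driver: str  # e.g., a point on a curve or surface


def coverage_gaps(catalog, observed, min_observations):
    """Return catalog buckets with fewer observations than the required minimum.

    catalog: iterable of Bucket the firm needs to cover
    observed: iterable of Bucket, one entry per price observation
    """
    counts = Counter(observed)
    return [bucket for bucket in catalog if counts[bucket] < min_observations]


# Hypothetical catalog with two points on a credit spread curve
five_year = Bucket("Credit", "Corporate bonds", "High yield", "Issuer spread curve", "5Y")
ten_year = Bucket("Credit", "Corporate bonds", "High yield", "Issuer spread curve", "10Y")
catalog = [five_year, ten_year]
observed = [five_year] * 24 + [ten_year] * 3
to_source = coverage_gaps(catalog, observed, min_observations=24)
```

Because the buckets are hashable value objects, the same structure can key both the internal observation counts and any vendor coverage map, which keeps the sourcing decision comparable across providers.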

Banks can choose to address data gaps wholesale by designating a centralized source that accommodates FRTB alongside other market and credit risk data requirements. Ultimately, a firm can benefit significantly by investing in a centralized market data source that aggregates data from internal systems of record alongside external vendor data. Benefits of this approach include centralized governance and oversight structures to promote data quality and efficient delivery to multiple systems, models, and users. Firms have also benefited from developing specialized ‘risk security masters’ that serve as golden sources of data with 24/7 data availability, enhanced data sets, and calculations.

Improving a firm’s FRTB pricing observations strategy is a key concern for firms implementing the new regulation.

The key drivers for data-related change in the RFET are twofold. Firstly, the new requirements have a stricter criterion for what constitutes a real price - banks can no longer rely on price observations such as trader marks. Secondly, FRTB prescribes a strict bucketing criterion for price observations so firms must ensure that they have the appropriate depth and granularity of data for relevant price observations. Banks can review coverage with a risk factor catalog that has all risk factors from pricing models mapped to products and real prices. Firms are likely to find that their quarterly VaR completeness exercise can be a good starting point to achieve the risk factor catalog.

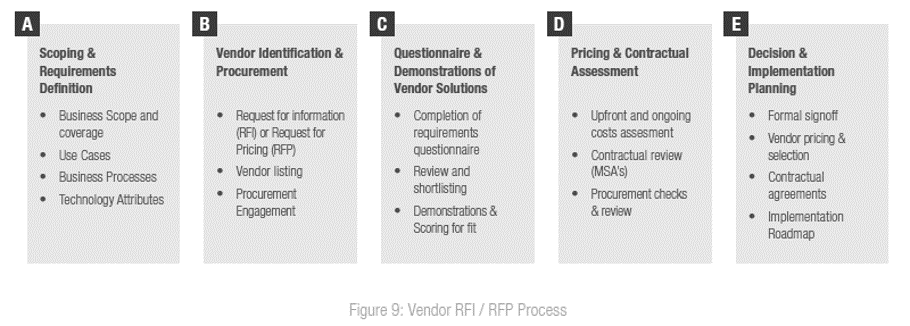

To establish a vendor as an ongoing data provider which meets the data requirements for FRTB, we recommend a formal RFI/RFP approach where the companies are compared for service, data quality, and pricing.

For firms that are considering collaboration with a vendor or multiple vendors to address gaps, there are several factors to consider:

Firms can also expect improvements in overall efficiency due to technology upgrades and cost savings resulting from redundancy reductions. Tracking these benefits over time can help justify spend and create a strong case for a firm to invest in a robust market data solution.

Understanding both the one-time and ongoing costs associated with building out the market data function for FRTB is essential for an effective business decision. One-time costs include documenting requirements and scope, customizing the platform, establishing vendor data feeds and STP for business processes as well as one-time purchases of historical data to backfill missing time series. Ongoing costs include data purchases to satisfy change requests, subscription costs (monthly) to data storage and ongoing data governance/quality control functions.

As a high-level control framework, we reference a methodical approach for selecting vendors through an RFI / RFP process – as below. However, our previous engagements have taught us that the selection of risk market data solutions often requires additional, customized and expert-driven assessments to get into the detail and solve specific scenarios.

If we consider the problems holistically, as a comprehensive data operating vision, the needs for the data span from risk mitigation through to cost control, increased governance, and ongoing accuracy with suitable availability. These multi-directional business cases require a careful and detail-oriented approach, for which we have developed a range of accelerators to support evaluation of the individual issues.

To make a vision become a reality, practical advice and support are required to enable change. When clients are selecting services to support the implementation of enhanced market data, there is a wide range of offerings.

| Project Governance & Resourcing | Business Requirements & Future State Definition | Industry & Data Sourcing Expertise | Technology & Data Architecture |
|---|---|---|---|
| Clear and scalable program governance structure | Business requirements definition including data, analytics, and reporting, as well as ‘playbooks’ for agile implementations | Assessing and qualifying a firm’s securities of interest against capital impact | The current state of architecture review and future state options, as well as gap analysis results |
| Resourcing support for the reporting teams and market risk capital calculations | Alignment of FO to Risk to Finance data (trade data, reference data, market data, and risk data), process and operating models | Sourcing expertise on Market Data products and services including where to find or build high-quality data sets for use in IMA | Technology solution designs, including architecture, infrastructure and vendor selections |
| Multi use-case approach for efficiency, e.g., BCBS239 teams to leverage existing knowledge and resources | Capability for desk level reporting and model validations | Market structure expertise across global markets specific to structured securities with NMRF characteristic. | Governance & data quality stewardship – current & future ‘consolidated’ state |
| Assessment of business unit engagement, communication, and adoption of change | Approaches for minimizing NMRF | Calibration of datasets through techniques such as evaluated vendor services to strengthen the IMA approval process | Technology assessment – development and ongoing maintenance for the platform consolidation |
| Quality acceptance and signoff for applications and user experience – conversion, testing, and data quality | Auto Scenario Generation | Cross product gap analysis with product prioritization, vendor sourcing, and internal coverage maps | End to end data lineage analysis with upstream, downstream and golden source assessment |
| Assessment of business processes and operating model to support the consolidated platform | Target end-state definition – to meet the needs of the business before & during the consolidation | Management and governance for design authority for strategic modular enhancement |

Taking a wholesale approach to market data, including investment in optimized sources across internally and externally available data, can benefit a firm beyond FRTB. Benefits include independent price verification, internal models under the SA-CCR regime, CCAR, risk, FO valuation and intraday risk management. If firms focus solely on FRTB in a silo, redundancies are likely and certain requirements may fall through the cracks. This can result in firms buying the same data twice and potentially missing out on opportunities for capital relief. For many banks, FRTB acts as an incentive to exit unprofitable businesses and offers firms the ability to adjust their trading strategies accurately, dependent on the availability of relevant data.

Given the challenges faced by many firms on a global basis, the vendor environment has been exploring new tooling and approaches to optimize the sourcing of data and computational platforms to develop enhanced risk management capabilities. Capco and Sia Partners believe that this is the right time to develop longer-term strategies and consider alternative approaches, as the next generation of risk frameworks provide firms with information to manage their trading strategies, pricing and risk management with greater accuracy.