
How your company can benefit from streaming analytics using Apache Kafka

Enterprises are moving from offline batch processing to online, real-time streaming analytics to benefit from acting on signals from their clients, their assets and their business context. Think customer interactions on e-commerce platforms, energy trading of renewable production, or real-time fraud detection in financial transactions. That is where Apache Kafka, or simply Kafka, comes into play!

More than one-third of all Fortune 500 companies use Kafka. They include top-10 travel companies, 7 of the top 10 banks, 8 of the top 10 insurance companies and 9 of the top 10 telecom companies [1].

Fig. 1 Example of Kafka users [2]

To capture the benefits of data as business happens, we need streaming analytics. Here, quickly moving and utilizing all of this data from point A to point B becomes as important as the data itself.


Traditionally, companies have used Enterprise Service Bus (ESB) systems to integrate and connect their data applications. While ESB solutions from TIBCO, MuleSoft, Microsoft and Oracle solve certain issues of coupling multiple applications into a concerted whole, they rely on mechanisms that scale poorly for passing data between applications and lack core support for analytical capabilities. Kafka and its streaming analytics concepts are fundamentally different: Kafka does not only exchange data, but combines real-time, event-triggered messaging with data storage and analytical processing capabilities. We observe that many companies are migrating to Kafka for these combined capabilities [3].

Kafka was developed at LinkedIn and later donated to the Apache Software Foundation. It is named after the writer Franz Kafka [4]:

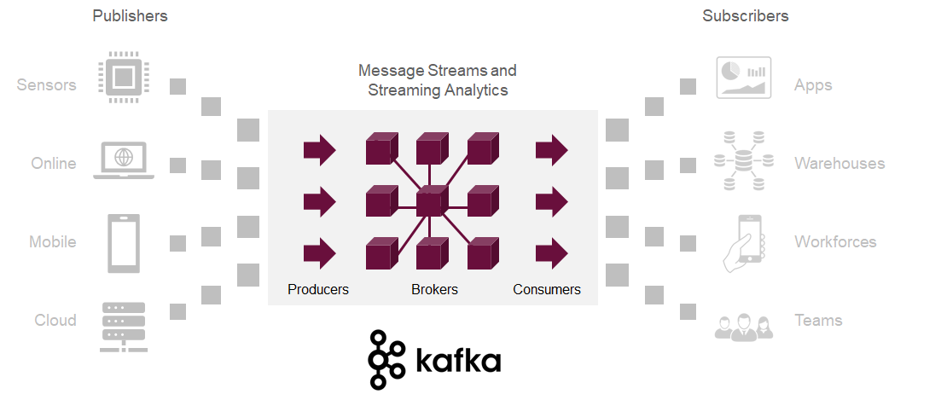

In Kafka, a single piece of data is called a message; for example, “customer X viewed product Y” could be a message. Messages are sent into named streams, which Kafka calls topics. For example, we could have a topic called “customer interests” to which all web stores send their real-time messages. Sending data into a topic is called publishing, and reading data from a topic is called subscribing. When we react to these streams using logic rules, statistics or even machine learning, this is called streaming analytics.
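To make these terms concrete, here is a minimal Python sketch of publishing, subscribing and a simple streaming-analytics rule. It is a toy in-memory model, not Kafka's client API: the `Stream` class and its `publish` and `subscribe` methods are hypothetical names chosen for illustration, and a real application would use a Kafka client library connected to a running cluster.

```python
from collections import Counter


class Stream:
    """Hypothetical in-memory stand-in for a Kafka topic (not the Kafka API)."""

    def __init__(self, name):
        self.name = name
        self.messages = []  # messages are kept in publish order

    def publish(self, message):
        # Publishing appends the message to the end of the stream.
        self.messages.append(message)

    def subscribe(self):
        # Subscribing yields the messages in the order they were published.
        yield from self.messages


customer_interests = Stream("customer interests")
customer_interests.publish({"customer": "X", "action": "viewed", "product": "Y"})
customer_interests.publish({"customer": "Z", "action": "viewed", "product": "Y"})
customer_interests.publish({"customer": "X", "action": "viewed", "product": "W"})

# A simple streaming-analytics rule: keep a running count of views per product.
views = Counter()
for message in customer_interests.subscribe():
    if message["action"] == "viewed":
        views[message["product"]] += 1

print(views.most_common(1))  # [('Y', 2)]
```

In a live system the loop would run continuously, updating the counts as each new message arrives instead of iterating over a fixed list.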

While this messaging concept is similar to traditional message queue systems, Kafka is more than that. It also stores streams in a fault-tolerant way and processes streams of messages in real time. Messages are not removed as soon as they are read, so several applications can consume the same stream independently. This makes Kafka a perfect fit when enterprise applications need to react quickly to specific events.

Fig. 2 Streaming analytics pipeline
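The difference from a classic queue can be sketched in a few lines of Python. In this toy model (the `Log` and `Consumer` classes are hypothetical, not Kafka's API), messages stay in the log after they are read, and each consumer keeps track of its own position, or offset. Kafka works on the same principle: consumers track offsets, and stored messages are only removed according to the topic's retention settings, so an application added later can still replay the history.

```python
class Log:
    """Toy model of a stored stream: messages are kept, not removed on read."""

    def __init__(self):
        self.entries = []

    def append(self, message):
        self.entries.append(message)
        return len(self.entries) - 1  # offset of the newly stored message

    def read(self, offset, max_messages=10):
        return self.entries[offset:offset + max_messages]


class Consumer:
    """Each consumer remembers its own position (offset) in the log."""

    def __init__(self, log, offset=0):
        self.log = log
        self.offset = offset

    def poll(self):
        batch = self.log.read(self.offset)
        self.offset += len(batch)  # move past what was just read
        return batch


log = Log()
for reading in [101, 99, 140]:
    log.append(reading)

live = Consumer(log)
print(live.poll())  # [101, 99, 140]
log.append(97)
print(live.poll())  # [97]

# A consumer added later can still replay the full history from offset 0.
late = Consumer(log, offset=0)
print(late.poll())  # [101, 99, 140, 97]
```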

Kafka is highly adaptable thanks to its horizontal scalability. This means that Kafka is limited less by its software design than by the amount of hardware or virtual cloud resources it has access to. This is made possible by many small, identical nodes (brokers) working in parallel as one whole, a design also referred to as a distributed cluster computing system. Kafka is also highly fault-tolerant: its architecture is based on many small components that act as backups for each other. For example, data is typically copied to multiple brokers so that it remains available if one broker goes down due to an unexpected hardware or software error.

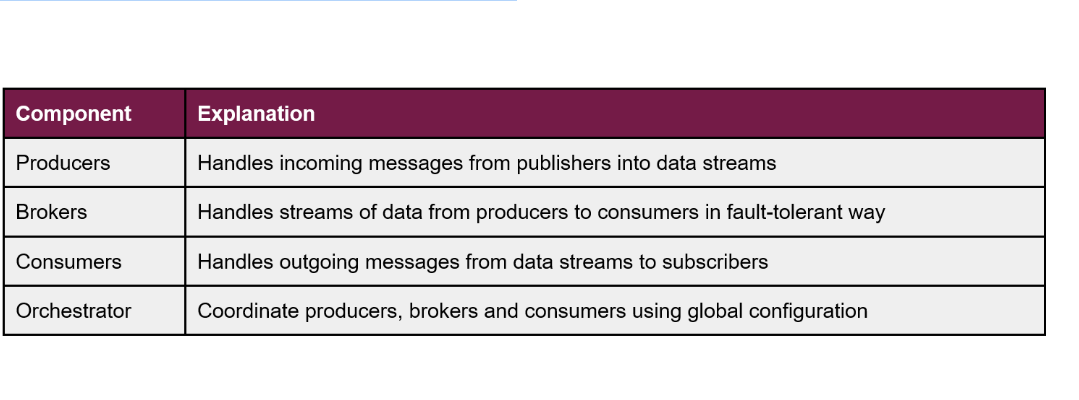
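Part of what makes this horizontal scaling work is that Kafka splits each topic into partitions that are spread across brokers; within a consumer group, each partition is read by one consumer, so adding partitions and consumers spreads the work. When a message has a key, Kafka's default partitioner hashes the key (using murmur2) to choose a partition, so messages with the same key land in the same partition and keep their order. The Python sketch below illustrates that idea; the partition count is hypothetical, and `zlib.crc32` is only a deterministic stand-in for Kafka's actual hash function.

```python
import zlib
from collections import Counter

NUM_PARTITIONS = 6  # hypothetical number of partitions for one topic


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Pick a partition for a message key.

    Kafka's default partitioner hashes keys with murmur2; crc32 is used here
    only as a simple, deterministic stand-in.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# Messages with the same key always map to the same partition.
for key, action in [("customer-1", "view"), ("customer-2", "view"), ("customer-1", "buy")]:
    print(key, action, "-> partition", partition_for(key))

# Many different keys spread out over the partitions, so the load can be
# shared by several brokers and consumers working in parallel.
load = Counter(partition_for(f"customer-{i}") for i in range(1000))
print(sorted(load.items()))
```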

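Kafka consists of several key components: producers, which publish messages; brokers, which store them; consumers, which subscribe to them; and an overarching orchestrator. The orchestrator is actually a separate open-source software package called Apache ZooKeeper, or ZooKeeper for short. (Recent Kafka releases can also run without ZooKeeper, using Kafka's built-in KRaft mode.)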

This architecture makes Kafka highly scalable and reliable at handling massive workloads. Kafka's fault tolerance is achieved by keeping multiple copies of each partition (a slice of a topic) on different brokers; this is called replication. A replication factor of 3 means that there are 3 copies of each message, stored on 3 different brokers. This ensures reliability in case of outages or errors and can help speed up certain operations. The figure below illustrates the Kafka components.

Fig. 3 Components in a scalable Kafka ecosystem

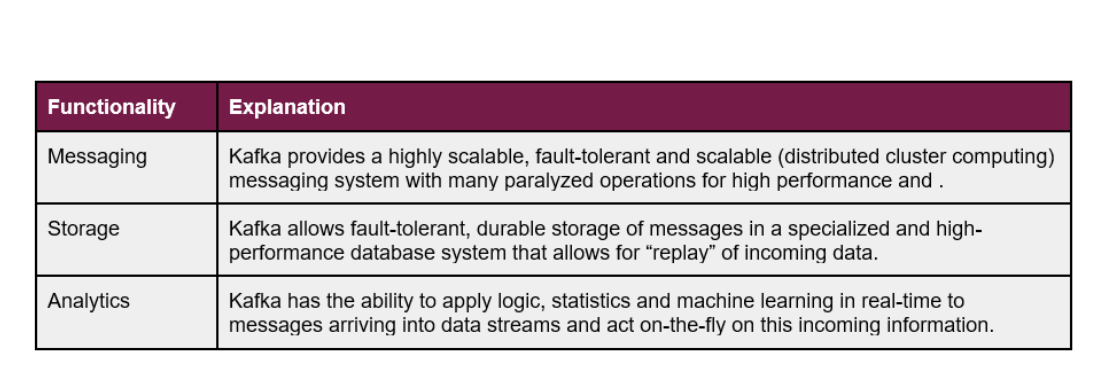
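The effect of a replication factor of 3 can be illustrated with a small Python sketch. It uses a simplified round-robin placement (Kafka's real assignment logic differs in detail) on a hypothetical cluster of 5 brokers, and then simulates the loss of one broker. Like Kafka, it refuses a replication factor larger than the number of brokers, since the copies could not all live on different machines.

```python
NUM_BROKERS = 5          # hypothetical cluster size
REPLICATION_FACTOR = 3   # three copies of every partition


def assign_replicas(num_partitions, num_brokers=NUM_BROKERS, rf=REPLICATION_FACTOR):
    """Simplified round-robin placement: partition p goes to brokers p, p+1, ..., p+rf-1 (mod N).

    These brokers are all distinct as long as rf <= num_brokers, so every copy
    of a partition lands on a different broker.
    """
    if rf > num_brokers:
        raise ValueError("replication factor cannot exceed the number of brokers")
    return {p: [(p + i) % num_brokers for i in range(rf)] for p in range(num_partitions)}


assignment = assign_replicas(num_partitions=4)
print(assignment)  # {0: [0, 1, 2], 1: [1, 2, 3], 2: [2, 3, 4], 3: [3, 4, 0]}

# Simulate losing one broker: every partition still has live copies elsewhere.
failed_broker = 2
for partition, replicas in assignment.items():
    alive = [b for b in replicas if b != failed_broker]
    print(f"partition {partition}: {len(alive)} live copies on brokers {alive}")
```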

Technically, Kafka can cover three functionalities that have traditionally been handled by separate systems [5]: Kafka is a messaging system, a storage system (like a specialized database), and an analytics platform.
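As an analytics platform, Kafka is typically paired with stream-processing code that aggregates messages over time windows; the Kafka Streams library, for example, supports windowed aggregations. The plain-Python sketch below does not use Kafka Streams: it only illustrates a tumbling-window sum, here over hypothetical renewable-production readings in MWh, where each event falls into exactly one fixed, non-overlapping window.

```python
from collections import defaultdict

WINDOW_SECONDS = 60  # hypothetical tumbling-window size


def tumbling_window_sums(events, window=WINDOW_SECONDS):
    """Sum values per key in fixed, non-overlapping time windows.

    events: iterable of (timestamp_seconds, key, value) in arrival order.
    Returns {(window_start, key): total}.
    """
    totals = defaultdict(float)
    for ts, key, value in events:
        window_start = ts - (ts % window)  # start of the window this event falls into
        totals[(window_start, key)] += value
    return dict(totals)


# Hypothetical production readings: (seconds, source, MWh)
readings = [(5, "wind", 10.0), (42, "wind", 5.0), (61, "wind", 7.5), (70, "solar", 3.0)]
print(tumbling_window_sums(readings))
# {(0, 'wind'): 15.0, (60, 'wind'): 7.5, (60, 'solar'): 3.0}
```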

At Sia Partners, we serve clients in many different industries. In this article we focus on three particular sectors: energy, finance and banking, and marketing and customer experience. Within these, we look at stream-analytics use cases, as that is where Kafka shines.

With stream analytics, companies can increase their ability to spot potential opportunities and risks before it is too late, tap into data that is always on (e.g. sensors, machine logs, weblogs, connected devices) and react quickly to changing conditions (e.g. identify fraud, replace faulty machinery).

Energy and Environment: Moving from batch-wise to real-time streaming operations in the energy sector unlocks new use cases and can greatly improve current operations. A major part of this potential comes from truly integrating Operational Technology (OT), such as SCADA systems, with Information Technology (IT), such as client portals, front-desk applications and analytical dashboards. We see the main use cases in the energy sector in fraud detection, outage management, asset management, workforce optimization, client support and trading decisions.

Finance and Banking: Real-time stream processing can greatly reduce banks' response times to their regulators and customers, and enable faster action against outside attackers.
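One common pattern behind faster action against attackers is a sliding-window rule that flags unusual bursts of activity. The Python sketch below, with a hypothetical threshold of more than 3 transactions per card within 60 seconds, illustrates the idea on an in-memory list; it is not a production fraud model, and in practice the same logic would run inside a stream-processing application consuming a transactions topic.

```python
from collections import defaultdict, deque

MAX_TXNS = 3         # hypothetical threshold
WINDOW_SECONDS = 60  # hypothetical sliding-window length


def detect_bursts(transactions, max_txns=MAX_TXNS, window=WINDOW_SECONDS):
    """Yield (timestamp, card) whenever a card exceeds max_txns within the last `window` seconds.

    transactions: iterable of (timestamp_seconds, card_id) in arrival order.
    """
    recent = defaultdict(deque)  # card_id -> timestamps still inside the window
    for ts, card in transactions:
        q = recent[card]
        q.append(ts)
        # Drop timestamps that have fallen out of the sliding window.
        while q and q[0] <= ts - window:
            q.popleft()
        if len(q) > max_txns:
            yield ts, card


stream = [(0, "A"), (10, "A"), (15, "B"), (20, "A"), (30, "A"), (95, "A")]
print(list(detect_bursts(stream)))  # [(30, 'A')]
```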

Marketing and Customer Experience: Many potential benefits can be realized by reacting in real time to changes in customer preferences, product prices, external events and competitors’ moves. Kafka can help companies move with or ahead of the market using the latest signals.

These use cases all show the potential of Kafka in completely different businesses, with a common underlying framework: immediate reaction to events happening right now!

Traditional businesses have relied on offline analytical capabilities, and more and more companies are moving towards online, in-the-moment reaction to real-time signals from the market and their customers. In this new real-time era every second counts, and being able to outrun competitors and react more swiftly to incoming risks holds huge potential.

We think streaming analytics is highly potent and could move many established business operations from offline to real time. Experimenting with the core ideas and technology of Kafka and its streaming analytics capabilities could be highly beneficial to your business. But where should you start to seize these new opportunities and solve some of your business problems in this new way? Curious about what stream analytics can do for your business? Please feel free to contact us!